Eric Barnard. Iterations

Figuring it out... one step at a time.

Sunday, January 6, 2013

My First Book is Out!

I'm pretty excited to announce that my first book, KnockoutJS Starter, was recently published. If my blog looked dead for the past several months, it was due to this little project (and a few other things).

Please check it out and leave any feedback/comments here. I would like to write another JavaScript book and constructive feedback would be really appreciated!

KnockoutJS Starter - Publisher Site

KnockoutJS Starter - Amazon

Tuesday, July 31, 2012

Debugging ELMAH on Azure

ELMAH worked on my machine, but not in the cloud.

Needless to say, if your exception logging isn't working, then you're probably not going to sleep well at night. So my first question, naturally, was "How in the world do I see what is going on inside ELMAH?".

One thing that many folks don't realize until they need to do something like this is that logging libraries tend to "eat" many of their internal exceptions since they are usually the layer that is added to take care of exactly that. I say they "eat" their exceptions not in a strict sense, but more so because there really is no where you can do a "TRY CATCH" to see what is happening.

Tracing to the Rescue

Instead of throwing exceptions both ELMAH and Log4net output their exceptions to the System.Diagnostics.Trace object. This is sweet when debugging locally, as any application traces will automatically write to the Output window in Visual Studio. But as the title of this post reads, that doesn't help me at all.

public class Log4netTraceListener : System.Diagnostics.TraceListener

{

private static readonly log4net.ILog _log;

public Log4netTraceListener()

{

_log = log4net.LogManager.GetLogger(typeof(Log4netTraceListener));

}

public override void Write(string message)

{

if (_log != null)

{

_log.Debug(message);

}

}

public override void WriteLine(string message)

{

if (_log != null)

{

_log.Debug(message);

}

}

}

Now, I first tried adding my Log4netTraceListener in the web.config. However, this Listener is initialized and added to the "Trace.Listeners" collection before Log4net has initialized in my web application. So instead, I have to add it programmatically during the application startup. I did the following in my Global.asax.cs:

System.Diagnostics.Trace.Listeners.Add(new Log4netTraceListener());

After all of that, I finally started seeing the following in my Log4net log:

System.Data.SqlClient.SqlException (0x80131904): Tables without a clustered index are not supported in this version of SQL Server. Please create a clustered index and try again.Whoa, that is not something I expected to see. Apparently the "ELMAH_Error" table has a non-clustered primary key and no clustered index on the table (not really sure why). So we have two options:

- Add another column to the table and setup a clustered index on that column.

- The "Sequence" column is already an "int IDENTITY(1,1)" column - so just setup a clustered index on that column.

CREATE CLUSTERED INDEX [IC_Elmah_Sequence] on dbo.[ELMAH_Error] (Sequence)

Wednesday, July 4, 2012

Getting Started with LocalDB

With the launch of SQL Server 2012, a new product called "LocalDB" was launched. This new toy is a great fit for needs that fall somewhere in the space between SQL Express and SQL Compact.

While you can read the full list of features for LocalDB, I'll highlight a few below:

- Easy, and simple MSI to install. I've had very little problems with this, and it is light-years better than trying to setup SQL Express

- Ability to control user access to the database

- Supports virtually all features of SQL Express (Stored Procedures, Triggers, ... even some basic Replication)

- Not an extra service on your machine to manage

Initially, there was some confusion as to whether LocalDB was high-powered file-based database or just a dumbed-down version of SQL Express. I'm not going to debate the semantics of what it is exactly, but I will tell you that you get your standard ".mdf" and ".ldf" files with LocalDB. However, the MSI installs some central libraries and an engine that power the LocalDB instances. The engine does have to have some knowledge as to the existence of any instances of LocalDB in order for those to work. So if you were looking for something where you can just drop a file into a directory and it will just work... not quite, but close.

Instances

LocalDB has the notion of "Automatic" and "Named" instances. Per version of LocalDB installed on your machine, there is only one "Automatic" instance per user. You can think of this as the default "SQLEXPRESS" instance that is installed when you first download and install SQL Express on a machine, only it is unique per user on the machine. So if you create an application that creates and uses the "Automatic" instance of LocalDB and this application is installed on a machine with 3 different user accounts, you will have 3 different ".mdf" and ".ldf" files after each user logs in and uses the application the first time. This "Automatic" instance is:

- "Public" – meaning any application process under the user's account can access the instance when logged into a machine

- Accessed via the connection string "(localdb)\{major version}" – which for right now means you'll use "(localdb)\v11.0"

- Great for development purposes when you need to just throw together a DB and ship something

Named Instances

The "Named" instance of LocalDB refers to private instances of a database that you can setup. These are useful if you want an application to have a separate, private database per user on a machine (a "Named" instance will run in a separate process under the current user on a machine).

Sharing Instances

So what if you want to allow multiple users on a machine to have access to a single LocalDB database instance? Well you can also "share" a "Named" instance of LocalDB. You can control who the instance is shared with, and you can enable/disable sharing at any time.

When connecting with a shared instance, your connection string will need to include an extra ".\" in the server portion. Going with the example above, one would use: "(localdb)\.\MySharedInstance". This tells the engine that this is a shared instance.

SqlLocalDb Utility

When you install LocalDB on a machine, it also installs the SqlLocalDb command line utility. It's pretty simple to use, and allows you to setup batch files to control the creation of your LocalDB's. One of the first things I do is create a "SetupDb.cmd" file in my source code folder of an app I'm building. This allows other devs to easily replicate setting up a development database on their machine in the same way.

Where Does Everything Go?

One of my first questions was, where are the actual database files?

The default is: "C:\Users\{USER}\AppData\Local\Microsoft\Microsoft SQL Server Local DB\Instances"

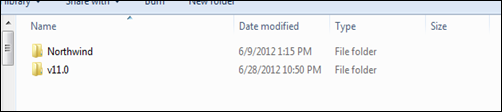

If I've created the "Northwind" Named instance as above, and I go to the default folder, I will see:

Inside each of these folders are your typical "master.mdf" , "temp.mdf", log files, etc... If you want to create your database's ".mdf" and ".ldf" files in a separate directory, you can easily do that by specifying the location when running a T-SQL statement to "CREATE DATABASE ...". Specifying where your data lives is not really any different than what you've done with SQL Server in the past.

If you want to point your application to specific '.mdf" or ".ldf" files at runtime, you can specify that in your connection string using the "AttachDbFileName" attribute, you can also read more here.

Wednesday, February 29, 2012

Blog Moveage

So I recently ported my blog over to Blogger. I’ve been using Wordpress on GoDaddy for a little over a year, and I started to realize:

Blogger Cost: $0.00

GoDaddy Cost: $5.00/month

Well, it doesn’t take a rocket scientist to figure out why to make the move.

Also, many times I noticed that my blog would take up to 4 to 5 seconds to respond. I know I get a ton of traffic, so it was kind of sad that with maybe one or two hits a month, GoDaddy wouldn’t even give me decent enough resources for my $5.

Google/Blogger – free and awesome – Use it.

Tuesday, November 1, 2011

JavaScript Basics–Native Stuff

For a while I’ve been wanting to write about some core things that I’ve learned while creating complex JavaScript applications. Not necessarily ‘begginer’ things, but I guess someone could use these as so.

I also build on the .NET platform. Think what you will, but I like it… and I’ve written Ruby (liked it too), and love JavaScript (a dynamic language at that) development.

First off JavaScript does have some core types that should be understood. My attempt to describe them below is for someone coming from a strongly typed world:

| Boolean | true/false |

| String | character array |

| Number | float (can be used as integer, decimal, etc…) |

| Null | null |

| Undefined | basically non-existent, values can’t be initialized to ‘undefined’ |

| Object | essentially a dictionary/hash table of key values |

| Array | not really a true array, but an object with numerical keys, and native manipulation functions. Think more ‘special object’ than data structure |

| Function | objects that can be invoked, but still can exist as singular objects |

So lets address the first big question, what’s the difference between Null and Undefined? Null and Undefined are very similar, however Undefined seems to be what most browsers use to indicate something hasn’t been declared or initialized. Null is something that is used when you perhaps want to initialize an object or property, but don’t want it to have a value.

Ok, so how about this Array vs Object thing? First off, Arrays DO have the following properties/methods:

var arr = [];

arr.length;

arr.push

arr.shift;

arr.unshift;

arr.splice;

arr.slice;

arr.pop;

arr.join;

arr.concat;

I won’t get into those here, especially when you have this

However, depending on the browser that you are using, these are not usually the super-efficient data structures that we’re used to. They are still very useful, I just want you to be informed.

Lastly, what this Function thing?

Functions, like I mentioned, can be invoked and have parameters passed to them (they can do a lot more, but I can’t put it all in one post, right?) Essentially a Function is an object that you can create and execute logic inside. You can hang it off of a regular object and make a method (like we’re normally used to) or some other fancy things we’ll get to.

Here’s a decent example of building out a basic object:

var car = {}; //a new object

car.name = "Corvette"; //a string

car.maxSpeed = 155; //a number

car.currentSpeed = null; //initializing to null

car.honk = function(){ //a function

alert("Honk");

};

A couple things you may have noticed so far… creating new objects and arrays.

var arr = [];

//Dont' do

var arr = new Array();

var obj = {};

//Don't do

var obj = new Object();

Use the shorthand and not the “new” keyword for native objects. Its pointless and makes you look like you don’t know what you’re doing.

Monday, August 8, 2011

SQL Script Generation–Column Names

When I’m doing heavy database development, one of the biggest annoyances I run into is having to hand key (or clumsily generate) the column names for stored procedures (especially those containing MERGE statements). I really wished I could use something to quickly output the columns to text so I could copy and paste the formatted column names as I needed them… well voila.

Our head DBA always has a few tricks up his sleeve, so we setup a system stored procedure to print out the column names (comma separated of course) for what ever table we want.

The PROC allows you to specify:

- Table Name (as it appears in the SYS.tables table)

- ‘L’ or ‘W’ depending if you want the columns listed with a {CRLF} after each column (‘L’) or all on one line (‘W)

- A ColumnName prefix (for doing table aliases)

- A table schema (if you have other table schema’s besides ‘dbo’)

Here’s the code (make sure to look at the bottom)

USE [master]

GO

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE PROCEDURE [dbo].[sp_listcolumns]

@table SYSNAME,

@list CHAR(1) = 'L',

@prefix SYSNAME = '',

@schema SYSNAME = 'dbo'

AS

BEGIN

SET NOCOUNT ON;

SET QUOTED_IDENTIFIER OFF

DECLARE @columnlist NVARCHAR(4000)

,@colctr INT = 1

,@sqlcmd NVARCHAR(500)

CREATE TABLE #column

(

ColumnName NVARCHAR(256),

Ordinal INT

)

CREATE UNIQUE CLUSTERED INDEX IC_ColumnList99 ON #column (Ordinal)

SET @columnlist = ''

SET @table = LTRIM(RTRIM(@table))

SET @list = LTRIM(RTRIM(@list))

SET @prefix = LTRIM(RTRIM(@prefix))

SET @schema = LTRIM(RTRIM(@schema))

INSERT INTO #column (ColumnName, Ordinal)

SELECT '['+LTRIM(RTRIM(COLUMN_NAME))+']', ORDINAL_POSITION

FROM INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_NAME = @table AND

TABLE_SCHEMA = @schema

ORDER BY ORDINAL_POSITION

-- Check to make sure we actually got usuable input from the User

IF ((SELECT COUNT(*) FROM #column) = 0)

RAISERROR('Bad Table Information - Please Try Again', 16, 1, 1)

IF(@prefix <> '')

BEGIN

UPDATE #column

SET ColumnName = @prefix + ColumnName

END

IF(@list = 'L')

BEGIN

-- If it is 'L' just append a ',' to each line, output would be:

-- TestCol1,

-- TestCol2

UPDATE C

SET @columnlist = @columnlist + C.ColumnName + ',' + CHAR(10)

FROM #column C

END

ELSE

BEGIN

-- else we output a 'w' where it just a ',' separated list of Column names

UPDATE C

SET @columnlist = @columnlist + C.ColumnName + ','

FROM #column C

-- Remove the last comma

SET @columnlist = LEFT(@columnlist,LEN(@columnlist) - 1)

END

-- Output the results so that they can be nicely copy

-- and pasted from the comment window

PRINT(@columnlist)

SET QUOTED_IDENTIFIER ON

SET NOCOUNT OFF

END

Lastly we need to add this as a system proc, or else it never changes its scope to the DB you are running it in

EXECUTE sp_MS_marksystemobject 'sp_listcolumns'

After we have this proc build and added, we can get output like this:

CREATE TABLE dbo.Test

(

Id UNIQUEIDENTIFIER

,TestCol1 NVARCHAR(64)

,TestCol2 INT

,TestCol3 BIT

)

GO

sp_listcolumns 'test'

--Outputs:

[Id],

[TestCol1],

[TestCol2],

[TestCol3],

--

sp_listcolumns 'test','w'

--Outputs:

[Id],[TestCol1],[TestCol2],[TestCol3]

--

sp_listcolumns 'test','l','testTable.'

--Outputs:

testTable.[Id],

testTable.[TestCol1],

testTable.[TestCol2],

testTable.[TestCol3],

--

sp_listcolumns 'test','w','testTable.'

--Outputs:

testTable.[Id],testTable.[TestCol1],testTable.[TestCol2],testTable.[TestCol3]